Word Stress Assessment for Computer Aided Language Learning

J. P. Arias, N. B. Yoma, and H. Vivanco

published in Interspeech, 2009

summarized by Davidson, 2011/09/09


1. Introduction

- Language- and text-independent

- No transcript is required

- However, a reference utterance is required

- The reference and student utterances are compared to decide whether their stress patterns are the same.

- The F0 contour and the frame energy contour are used for the comparison.

- The two utterances are aligned with the DTW algorithm using MFCCs.
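A minimal sketch of DTW alignment between two utterances, assuming each one has already been converted into a list of per-frame feature vectors (e.g. MFCCs; feature extraction is not shown). The Euclidean local distance and the basic step pattern without slope constraints are assumptions; the summary does not say which DTW variant the authors used.

```python
import math
from typing import List, Sequence, Tuple

Frame = Sequence[float]  # one feature vector per frame (e.g. MFCCs)


def euclidean(a: Frame, b: Frame) -> float:
    """Local distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dtw_align(ref: List[Frame], test: List[Frame]) -> Tuple[float, List[Tuple[int, int]]]:
    """Return the accumulated DTW cost and the warping path as (ref_idx, test_idx) pairs.

    Allowed steps are (i-1, j), (i, j-1) and (i-1, j-1) (an assumed step pattern).
    """
    n, m = len(ref), len(test)
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost of aligning ref[:i] with test[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = euclidean(ref[i - 1], test[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    # Backtrack from (n, m) to (1, 1) to recover the warping path.
    path: List[Tuple[int, int]] = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        if i == 1 and j == 1:
            break
        _, i, j = min(
            (cost[i - 1][j - 1], i - 1, j - 1),
            (cost[i - 1][j], i - 1, j),
            (cost[i][j - 1], i, j - 1),
        )
    path.reverse()
    return cost[n][m], path
```

With toy one-dimensional "features", `dtw_align([[0.0], [1.0], [2.0]], [[0.0], [0.0], [1.0], [2.0]])` returns a cost of `0.0` and the path `[(0, 0), (0, 1), (1, 2), (2, 3)]`: the repeated first frame of the second sequence is absorbed by the warp. The same path can then be used to read the F0 and energy contours of both utterances on a common time axis.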

2. The proposed system

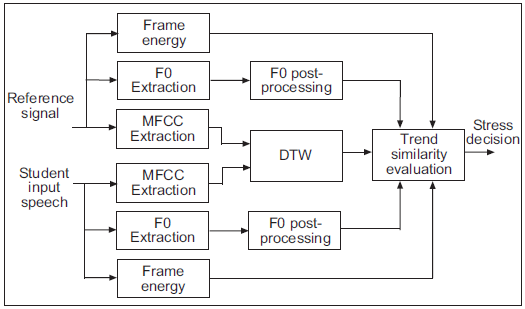

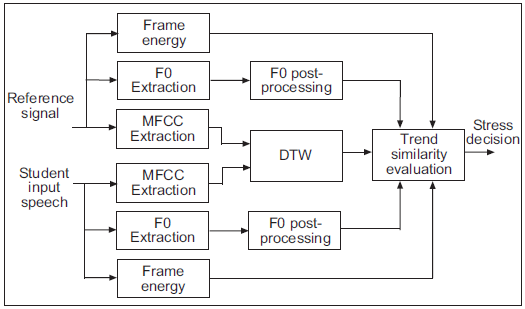

Figure 1: Block diagram of the proposed system

- Both utterances are aligned by DTW with MFCCs.

- The F0 and frame energy contours are used to evaluate the trend similarity between the two utterances and to make the stress decision.

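The summary does not say how trend similarity is measured. One simple possibility, assumed here, is the Pearson correlation between the reference and student contours after both are read along the DTW path. The decision function `same_stress_pattern` and its 0.5 threshold are hypothetical, included only to show where a stress decision could be made.

```python
import math
from typing import Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # a flat contour carries no trend information
    return cov / (sx * sy)


def trend_similarity(ref_contour: Sequence[float], test_contour: Sequence[float],
                     path: Sequence[Tuple[int, int]]) -> float:
    """Correlate two frame-level contours after warping them onto the DTW path."""
    ref_warped = [ref_contour[i] for i, _ in path]
    test_warped = [test_contour[j] for _, j in path]
    return pearson(ref_warped, test_warped)


def same_stress_pattern(f0_sim: float, energy_sim: float, threshold: float = 0.5) -> bool:
    """Hypothetical rule: both contours must follow the reference trend closely enough."""
    return f0_sim >= threshold and energy_sim >= threshold
```

Correlation ignores absolute level and range, so a student with a higher voice but the same rise and fall pattern still scores close to 1, which is the property a trend comparison needs.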

- Duration

- Syllable duration vs. Syllable nucleus duration:

Figure 1: Gaussian approximation of duration measures of non-prominent (solid lines) and prominent syllables (dash-dotted lines); panels: syllable duration, syllable nucleus duration

- Both measures give almost the same recognition rates, so the syllable duration can be replaced by the syllable nucleus duration, which can be measured more reliably

- Normalized by the rate of speech (ROS), i.e., the number of phonemes per unit time
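A small sketch of the duration feature. The summary only says the nucleus duration is "normalized by ROS"; multiplying by ROS (equivalently, dividing by the average phoneme duration 1/ROS) is an assumed reading that makes the value comparable between fast and slow speakers. The log step anticipates the log-normalized features used by the stress detector.

```python
import math


def rate_of_speech(num_phonemes: int, utterance_duration_s: float) -> float:
    """Rate of speech (ROS) in phonemes per second."""
    return num_phonemes / utterance_duration_s


def normalized_nucleus_duration(nucleus_duration_s: float, ros: float) -> float:
    """Nucleus duration in units of the average phoneme duration (assumed form: duration * ROS)."""
    return nucleus_duration_s * ros


def log_normalized_duration(nucleus_duration_s: float, ros: float) -> float:
    """Log of the ROS-normalized duration."""
    return math.log(normalized_nucleus_duration(nucleus_duration_s, ros))
```

For example, an utterance with 30 phonemes in 2.5 s has an ROS of 12 phonemes/s, so a 0.15 s nucleus gets a normalized duration of about 1.8, i.e. 1.8 average phoneme lengths.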

- Energy

- Syllable nucleus RMS energy

- Normalized by dividing it by the mean energy of the utterance
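A minimal sketch of the energy feature, assuming the nucleus boundaries are already known as sample indices. Taking the RMS of the whole utterance as its "mean energy" is an assumption; a mean over frame energies would be another reasonable reading.

```python
import math
from typing import Sequence


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square amplitude of a block of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def normalized_nucleus_energy(signal: Sequence[float], start: int, end: int) -> float:
    """RMS of the syllable nucleus signal[start:end] divided by the RMS of the whole utterance."""
    return rms(signal[start:end]) / rms(signal)
```

A value above 1 means the nucleus is louder than the utterance on average, independently of recording gain.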

- Fundamental frequency (F0) contour

- Post-processed to smooth the contour, followed by a final interpolation across the unvoiced gaps between voiced regions to obtain a continuous profile
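The post-processing can be sketched as two passes over a frame-level F0 track in which unvoiced frames are marked with 0 (an assumed convention). The summary does not name the smoothing method; a median filter over voiced neighbours is used here as a common choice, followed by linear interpolation across unvoiced gaps, with the edge values held constant at both ends.

```python
from statistics import median
from typing import List, Sequence


def smooth_f0(f0: Sequence[float], width: int = 5) -> List[float]:
    """Median-filter voiced frames (F0 > 0); unvoiced frames stay 0.

    Only voiced neighbours inside the window are used, so isolated spikes are
    removed without pulling values towards 0.
    """
    half = width // 2
    out = []
    for i, v in enumerate(f0):
        if v <= 0:
            out.append(0.0)
            continue
        window = [x for x in f0[max(0, i - half): i + half + 1] if x > 0]
        out.append(float(median(window)))
    return out


def interpolate_unvoiced(f0: Sequence[float]) -> List[float]:
    """Linearly interpolate across unvoiced gaps; hold the edge values at both ends."""
    voiced = [i for i, v in enumerate(f0) if v > 0]
    if not voiced:
        return [0.0] * len(f0)
    out = [float(v) for v in f0]
    # Leading and trailing unvoiced frames take the nearest voiced value.
    for i in range(voiced[0]):
        out[i] = out[voiced[0]]
    for i in range(voiced[-1] + 1, len(f0)):
        out[i] = out[voiced[-1]]
    # Interior gaps: straight line between the voiced frames on either side.
    for a, b in zip(voiced, voiced[1:]):
        for i in range(a + 1, b):
            t = (i - a) / (b - a)
            out[i] = out[a] + t * (out[b] - out[a])
    return out
```

For example, `interpolate_unvoiced([0, 100, 0, 0, 130, 0])` fills the gap to give approximately `[100, 100, 110, 120, 130, 130]`.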

- Spectral emphasis

- RMS energy of the signal in the 500 to 2000 Hz band, obtained with a bandpass FIR (finite impulse response) filter
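A self-contained sketch of the spectral emphasis measure. The summary only specifies a bandpass FIR filter for 500 to 2000 Hz; the windowed-sinc design, Hamming window, 101 taps and discarding of the start-up transient are assumptions chosen for illustration.

```python
import math
from typing import List, Sequence


def bandpass_fir(low_hz: float, high_hz: float, fs: float, num_taps: int = 101) -> List[float]:
    """Windowed-sinc band-pass FIR design with a Hamming window (odd num_taps assumed)."""
    mid = (num_taps - 1) / 2
    f1, f2 = low_hz / fs, high_hz / fs  # cut-offs in cycles per sample
    taps = []
    for n in range(num_taps):
        k = n - mid
        if k == 0:
            ideal = 2 * (f2 - f1)  # limit of the sinc difference at the centre tap
        else:
            # ideal band-pass = low-pass(f2) - low-pass(f1)
            ideal = (math.sin(2 * math.pi * f2 * k) - math.sin(2 * math.pi * f1 * k)) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    return taps


def fir_filter(taps: Sequence[float], x: Sequence[float]) -> List[float]:
    """Direct-form convolution with zero initial state; output has len(x) samples."""
    return [
        sum(taps[k] * x[n - k] for k in range(min(len(taps), n + 1)))
        for n in range(len(x))
    ]


def spectral_emphasis(x: Sequence[float], fs: float, low_hz: float = 500.0,
                      high_hz: float = 2000.0, num_taps: int = 101) -> float:
    """RMS of the band-passed signal, skipping the filter's start-up transient."""
    y = fir_filter(bandpass_fir(low_hz, high_hz, fs, num_taps), x)[num_taps - 1:]
    return math.sqrt(sum(v * v for v in y) / len(y))
```

At 8 kHz sampling, a unit-amplitude 1000 Hz tone keeps an RMS close to 0.707, while a 100 Hz tone is attenuated to nearly zero. In practice the measure would be computed over each syllable nucleus rather than a whole test tone.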

3. Experiments

- Stress detector

- Previous studies have shown that the most reliable acoustic correlates of syllable stress are syllable duration and energy

- Stressed syllables exhibit a longer duration and greater energy in the mid-to-high frequency band

Figure 2: Scatter plots of prominent and non-prominent syllables in terms of log-normalized duration and log-normalized spectral emphasis

- The two classes overlap because stress is the only contributing factor considered here, whereas prominence depends on both stress and pitch accent.

- The dashed lines represent the decision boundary formed by the multivariate Gaussian distributions of the prominent and non-prominent syllables.

- Both acoustic parameters are log-transformed to achieve a better fit to a Gaussian distribution.
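A minimal sketch of a two-class Gaussian classifier on the two log-transformed features (log-normalized duration, log-normalized spectral emphasis). Each class gets a full-covariance bivariate Gaussian fitted by maximum likelihood; a syllable is labelled prominent where the prominent model's log-likelihood is higher, so the decision boundary is the curve where both are equal. The optional log prior ratio is an assumption; the summary does not say whether class priors were used.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def fit_gaussian(points: Sequence[Point]):
    """Maximum-likelihood mean and 2x2 covariance of 2-D points."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / n
    syy = sum((p[1] - my) ** 2 for p in points) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    return (mx, my), ((sxx, sxy), (sxy, syy))


def log_likelihood(x: Point, mean, cov) -> float:
    """Log density of a bivariate Gaussian at x."""
    (a, b), (_, d) = cov
    det = a * d - b * b
    dx, dy = x[0] - mean[0], x[1] - mean[1]
    # Mahalanobis term using the closed-form inverse of a 2x2 matrix.
    maha = (d * dx * dx - 2 * b * dx * dy + a * dy * dy) / det
    return -0.5 * (maha + math.log(det)) - math.log(2 * math.pi)


def classify(x: Point, model_prom, model_non, log_prior_ratio: float = 0.0) -> bool:
    """True if x falls on the prominent side of the Gaussian decision boundary."""
    return log_likelihood(x, *model_prom) - log_likelihood(x, *model_non) + log_prior_ratio > 0
```

Raw measurements would be passed through `math.log` before fitting and classification, matching the log-transformed axes of the scatter plots. With unequal class covariances the boundary is quadratic, which is why it appears as a curve rather than a straight line.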

- Pitch accent detector

- Intonation event method

- Use the parameters of the RFC (rise/fall/connection) model, in the form of the Tilt parameter set, to describe pitch accent shapes uniquely

- The F0 contour is divided into 25 ms frames, and a straight line is fitted to the data in each frame by least median of squares regression, which is robust to outliers

- Classify each frame as R/F/C according to the gradient of its fitted line

- Merge adjacent frames with the same RFC classification into one interval (an intonational event)

- Possible pitch accent candidates are the intonational events that exhibit a rise followed by a fall, where the rise section is more relevant to prominence.
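The intonational event steps can be sketched as follows. Assumptions not in the summary: F0 is sampled every 5 ms (so a 25 ms frame holds 5 samples), frames do not overlap, the least median of squares fit is done exhaustively over lines through pairs of samples, and the slope threshold of 100 Hz/s separating R, F and C is a hypothetical value.

```python
from itertools import combinations
from statistics import median
from typing import List, Sequence, Tuple


def lmeds_slope(t: Sequence[float], y: Sequence[float]) -> float:
    """Least-median-of-squares line fit; returns the slope of the best line.

    Every line through two samples is tried and the one with the smallest
    median squared residual wins, so a few outliers cannot drag the fit.
    """
    best_slope, best_score = 0.0, float("inf")
    for i, j in combinations(range(len(t)), 2):
        if t[j] == t[i]:
            continue
        slope = (y[j] - y[i]) / (t[j] - t[i])
        icept = y[i] - slope * t[i]
        score = median([(yk - (slope * tk + icept)) ** 2 for tk, yk in zip(t, y)])
        if score < best_score:
            best_slope, best_score = slope, score
    return best_slope


def rfc_labels(f0: Sequence[float], hop_s: float = 0.005, frame_s: float = 0.025,
               slope_thresh: float = 100.0) -> List[str]:
    """Label each non-overlapping frame 'R', 'F' or 'C' from its LMedS slope (Hz/s)."""
    n = round(frame_s / hop_s)  # samples per frame
    labels = []
    for start in range(0, len(f0) - n + 1, n):
        t = [k * hop_s for k in range(start, start + n)]
        slope = lmeds_slope(t, f0[start:start + n])
        labels.append("R" if slope > slope_thresh else "F" if slope < -slope_thresh else "C")
    return labels


def merge_events(labels: Sequence[str]) -> List[Tuple[str, int, int]]:
    """Merge runs of identical labels into (label, first_frame, last_frame) events."""
    events: List[Tuple[str, int, int]] = []
    for i, lab in enumerate(labels):
        if events and events[-1][0] == lab:
            events[-1] = (lab, events[-1][1], i)
        else:
            events.append((lab, i, i))
    return events


def rise_fall_candidates(events: Sequence[Tuple[str, int, int]]):
    """Pitch accent candidates: a rise event immediately followed by a fall event."""
    return [(r, f) for r, f in zip(events, events[1:]) if r[0] == "R" and f[0] == "F"]
```

On a flat, rising, falling and flat contour, the frames are labelled `C C R R F F C` and one rise-fall candidate is found; a single spiky sample inside a flat frame does not change its `C` label, which is the point of the robust fit.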

- Sluijter and van Heuven suggested that pitch accent can be detected by overall syllable energy and pitch variation.

- Pitch variation is measured by the event amplitude, EvAmp = |rise amplitude| + |fall amplitude|; the intonational event parameter is then EvAmp * EvDur * EvRel, where EvDur is the event duration and EvRel is the relevance of the event within the utterance (exact definition unclear)
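The event parameter can be computed for a single rise-fall event as sketched below. Taking the rise and fall amplitudes as the F0 change from the event start to the peak and from the peak to the event end is an assumption, and `ev_rel` is simply passed in because its definition is left open above.

```python
def event_parameter(f0_start: float, f0_peak: float, f0_end: float,
                    t_start: float, t_end: float, ev_rel: float = 1.0) -> float:
    """EvAmp * EvDur * EvRel for one rise-fall event (amplitude definitions assumed)."""
    rise_amp = f0_peak - f0_start           # positive for a rise
    fall_amp = f0_end - f0_peak             # negative for a fall
    ev_amp = abs(rise_amp) + abs(fall_amp)  # EvAmp = |rise amplitude| + |fall amplitude|
    ev_dur = t_end - t_start                # EvDur in seconds
    return ev_amp * ev_dur * ev_rel
```

For a rise from 100 Hz to a 130 Hz peak and a fall to 110 Hz over 0.4 to 0.6 s, EvAmp = 30 + 20 = 50 Hz and EvDur = 0.2 s, giving a parameter of about 10 when EvRel = 1. Larger and longer excursions therefore score higher, in line with pitch variation as a cue to pitch accent.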

- Intonation events

Figure 3: Scatter plots of prominent and non-prominent syllables in terms of log-normalized overall syllable energy and log-normalized intonational event parameters

- As before, the dashed lines represent the decision boundary formed by the multivariate Gaussian distributions of the prominent and non-prominent syllables.

- Prominence detector

- Prominence detector = stress detector + pitch accent detector

- Prominent syllable = stressed syllable or pitch-accented syllable
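The combination rule can be written out directly: the two detector outputs map onto the four "detected as" categories of the detection-results table, and a syllable counts as prominent when either detector fires. The function names are illustrative only.

```python
def detection_category(stressed: bool, accented: bool) -> str:
    """Map the stress and pitch accent detector outputs to one of four categories."""
    if stressed and accented:
        return "Stressed+Pitch Accented"
    if stressed:
        return "Stressed"
    if accented:
        return "Pitch Accented"
    return "None"


def is_prominent(stressed: bool, accented: bool) -> bool:
    """Prominence detector = stress detector OR pitch accent detector."""
    return stressed or accented
```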

- Experiments were conducted on a subset of the TIMIT corpus

- Training set: 3637 syllables, 25 speakers

- Test set: 3643 syllables, 26 speakers

- Detection result

| Syllable type / Detected as | Stressed | Pitch Accented | Stressed+Pitch Accented | None |
|---|---|---|---|---|
| Prominent | 650 | 53 | 280 | 271 |
| Non-Prominent | 314 | 41 | 50 | 1984 |

- Recognition rate = (650+53+280+1984) / 3643 = 81.44%

- Insertion rate = (314+41+50) / 3643 = 11.12%

- Deletion rate = 271 / 3643 = 7.44%
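The three rates can be recomputed from the counts in the detection-results table. This sketch follows the reading implied by the formulas: a prominent syllable is recognized when either detector fires, a non-prominent syllable when neither does; non-prominent syllables flagged by a detector are insertions, and prominent syllables missed by both are deletions.

```python
# Counts from the detection-results table (test set).
# Columns: Stressed, Pitch Accented, Stressed+Pitch Accented, None
PROMINENT = (650, 53, 280, 271)
NON_PROMINENT = (314, 41, 50, 1984)


def rates(prominent, non_prominent):
    """Return (recognition, insertion, deletion) rates as fractions of all syllables."""
    total = sum(prominent) + sum(non_prominent)
    correct = sum(prominent[:3]) + non_prominent[3]  # hits on prominent + correct rejections
    insertions = sum(non_prominent[:3])              # non-prominent syllables flagged as prominent
    deletions = prominent[3]                         # prominent syllables missed by both detectors
    return correct / total, insertions / total, deletions / total


recognition, insertion, deletion = rates(PROMINENT, NON_PROMINENT)
print(f"{recognition:.2%} {insertion:.2%} {deletion:.2%}")  # 81.44% 11.12% 7.44%
```

The row totals (1254 prominent and 2389 non-prominent syllables) add up to the 3643 test syllables, and the three rates sum to 100%.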

4. Results and discussion

- The performance is comparable to the inter-rater agreement of around 80% reported in previous research

- 82% for distinguishing high/low/neutral tones, between two human raters who inspected pitch height only

- 77% for distinguishing high/low tones only


last updated: 2011/08/29