Tutorial on tone recognition for isolated characters

This tutorial explains the basics of Mandarin tone recognition for isolated characters. The dataset is available upon request.


Preprocessing

Before we start, let's add the necessary toolboxes to MATLAB's search path:

addpath d:/users/jang/matlab/toolbox/utility
addpath d:/users/jang/matlab/toolbox/sap
addpath d:/users/jang/matlab/toolbox/machineLearning

All the above toolboxes can be downloaded from the author's toolbox page. Make sure you are using the latest toolboxes to work with this script.

For compatibility, here we list the platform and MATLAB version that we used to run this script:

fprintf('Platform: %s\n', computer);
fprintf('MATLAB version: %s\n', version);
fprintf('Script starts at %s\n', char(datetime));
scriptStartTime=tic;	% Timing for the whole script

Platform: PCWIN64
MATLAB version: 9.6.0.1214997 (R2019a) Update 6
Script starts at 18-Jan-2020 20:53:27

Dataset collection

First of all, we shall collect all the recording data from the corpus directory.

audioDir='D:\dataSet\mandarinTone\msar-2013';
fileCount=100;
auSet=recursiveFileList(audioDir, 'wav');
%auSet=auSet(1:length(auSet)/fileCount:end);	% Use only a subset for simplicity
auSet=auSet(1:fileCount);
fprintf('Collected %d recordings...\n', length(auSet));

Collected 100 recordings...

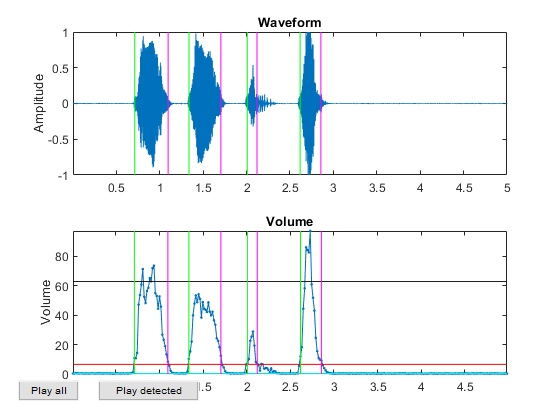

Each recording contains the same syllable uttered in 4 tones. We therefore perform endpoint detection to obtain 4 segments, one for each tone:

trOpt=trOptSet;
fprintf('Perform endpoint detection...\n');
fs=16000;
%if ~exist('auSet.mat', 'file')
    tic
    epdOpt=trOpt.epdOpt;
    for i=1:length(auSet)
        fprintf('%d/%d, file=%s\n', i, length(auSet), auSet(i).path);
        au=myAudioRead(auSet(i).path);
        [~, ~, segment]=epdByVol(au, epdOpt, 1);
    %   if length(segment)~=4, fprintf('Press...'); pause; fprintf('\n'); end
        auSet(i).segment=segment;
        auSet(i).segmentCount=length(segment);
        auSet(i).au=au;
    end
    toc
    fprintf('Saving auSet.mat...\n');
    save auSet auSet
%else
%   fprintf('Loading auSet.mat...\n');
%   load auSet.mat
%end

Perform endpoint detection... 1/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/bu.wav 2/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/duan.wav 3/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/dui.wav 4/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/gao.wav 5/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/han.wav 6/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/hua.wav 7/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/ji.wav 8/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/kun.wav 9/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/le.wav 10/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/lei.wav 11/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/nang.wav 12/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/nong.wav 13/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/pin.wav 14/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/ren.wav 15/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/seng.wav 16/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/shi.wav 17/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/shou.wav 18/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/tian.wav 19/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/tuo.wav 20/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/xia.wav 21/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/you.wav 22/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/yun.wav 23/100, file=D:\dataSet\mandarinTone\msar-2013/b00202071/zhe.wav 24/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/chuan.wav 25/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/deng.wav 26/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/diu.wav 27/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/fei.wav 28/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/gen.wav 29/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/lan.wav 30/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/lun.wav 31/100, 
file=D:\dataSet\mandarinTone\msar-2013/b00901078/mang.wav 32/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/pian.wav 33/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/po.wav 34/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/qiang.wav 35/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/qie.wav 36/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/que.wav 37/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/shai.wav 38/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/shuo.wav 39/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/si.wav 40/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/sou.wav 41/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/tao.wav 42/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/ti.wav 43/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/wen.wav 44/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/yong.wav 45/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/yu.wav 46/100, file=D:\dataSet\mandarinTone\msar-2013/b00901078/ze.wav 47/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ao.wav 48/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ban.wav 49/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ce.wav 50/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/chan.wav 51/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/fang.wav 52/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ju.wav 53/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/lun.wav 54/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/mai.wav 55/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/na.wav 56/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/niu.wav 57/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ping.wav 58/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/qiang.wav 59/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ruo.wav 60/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/shuan.wav 61/100, 
file=D:\dataSet\mandarinTone\msar-2013/b97902077/shui.wav 62/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/si.wav 63/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/sou.wav 64/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/su.wav 65/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ti.wav 66/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/xiao.wav 67/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/xue.wav 68/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/zang.wav 69/100, file=D:\dataSet\mandarinTone\msar-2013/b97902077/zong.wav 70/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/a.wav 71/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/cang.wav 72/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/cong.wav 73/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/diao.wav 74/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/ei.wav 75/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/eng.wav 76/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/gou.wav 77/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/gu.wav 78/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/la.wav 79/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/liu.wav 80/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/ming.wav 81/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/ni.wav 82/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/nyue.wav 83/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/pan.wav 84/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/qu.wav 85/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/ruan.wav 86/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/rui.wav 87/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/se.wav 88/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/shan.wav 89/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/wang.wav 90/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/xiang.wav 91/100, 
file=D:\dataSet\mandarinTone\msar-2013/b98505039/zhao.wav 92/100, file=D:\dataSet\mandarinTone\msar-2013/b98505039/zuo.wav 93/100, file=D:\dataSet\mandarinTone\msar-2013/b98901024/cai.wav 94/100, file=D:\dataSet\mandarinTone\msar-2013/b98901024/chun.wav 95/100, file=D:\dataSet\mandarinTone\msar-2013/b98901024/die.wav 96/100, file=D:\dataSet\mandarinTone\msar-2013/b98901024/eng.wav 97/100, file=D:\dataSet\mandarinTone\msar-2013/b98901024/fo.wav 98/100, file=D:\dataSet\mandarinTone\msar-2013/b98901024/hen.wav 99/100, file=D:\dataSet\mandarinTone\msar-2013/b98901024/kou.wav 100/100, file=D:\dataSet\mandarinTone\msar-2013/b98901024/lao.wav Elapsed time is 21.802770 seconds. Saving auSet.mat...
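The internals of epdByVol are not shown in this tutorial. The sketch below illustrates the general idea of volume-based endpoint detection; it is not the toolbox implementation. It computes a per-frame volume as a sum of absolute amplitudes, keeps the frames whose volume exceeds a threshold, and groups consecutive active frames into segments. The frame size, hop size, and 10% threshold ratio are assumed values. A synthetic signal with two 200 Hz bursts stands in for a real recording.

```matlab
% Minimal volume-based endpoint detection sketch (NOT the toolbox's epdByVol).
fs = 16000;
t = (0:fs-1)'/fs;                        % 1 s of synthetic audio
x = zeros(size(t));
burst1 = t >= 0.1 & t < 0.3;             % first synthetic "syllable"
burst2 = t >= 0.5 & t < 0.8;             % second synthetic "syllable"
x(burst1) = sin(2*pi*200*t(burst1));
x(burst2) = sin(2*pi*200*t(burst2));
frameSize = 512;                         % assumed frame size (samples)
hop = 256;                               % assumed hop size (samples)
frameNum = floor((length(x)-frameSize)/hop) + 1;
vol = zeros(frameNum, 1);
for k = 1:frameNum
    frame = x((k-1)*hop + (1:frameSize));
    vol(k) = sum(abs(frame - mean(frame)));   % volume = sum of absolute amplitude
end
th = 0.1*max(vol);                       % assumed threshold: 10% of the maximum volume
active = vol > th;
d = diff([0; active; 0]);                % +1 where a segment starts, -1 just after it ends
segBeginFrame = find(d == 1);
segEndFrame = find(d == -1) - 1;
segBeginSample = (segBeginFrame-1)*hop + 1;
segEndSample = (segEndFrame-1)*hop + frameSize;
segCount = length(segBeginFrame);
fprintf('Found %d segments\n', segCount);
```

A recording for which such a procedure does not return exactly 4 segments is the kind of case that the next step discards.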

Endpoint detection does not always find exactly 4 segments, so we simply remove the recordings that cannot be segmented correctly:

keepIndex=[auSet.segmentCount]==4;

auSet=auSet(keepIndex);

fprintf('Keep %d recordings for further analysis\n', length(auSet));

Keep 82 recordings for further analysis

After this step, each recording has 4 segments corresponding to the 4 tones. We can then perform pitch tracking on these segments:

fprintf('Pitch tracking...\n');
ptOpt=trOpt.ptOpt;
ptOpt.pitchDiffMax=2;
for i=1:length(auSet)
    fprintf('%d/%d, file=%s\n', i, length(auSet), auSet(i).path);
    for j=1:length(auSet(i).segment)
        au=auSet(i).au;
        au.signal=au.signal(auSet(i).segment(j).beginSample:auSet(i).segment(j).endSample);
    %   auSet(i).segment(j).pitch=pitchTrackForcedSmooth(au, ptOpt);
        auSet(i).segment(j).pitchObj=pitchTrack(au, ptOpt);
    end
end
fprintf('Saving auSet.mat after pitch tracking...\n');
save auSet auSet

Pitch tracking... 1/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/bu.wav 2/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/duan.wav 3/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/dui.wav 4/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/gao.wav 5/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/han.wav 6/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/hua.wav 7/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/ji.wav 8/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/kun.wav 9/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/le.wav 10/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/lei.wav 11/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/nang.wav 12/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/nong.wav 13/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/pin.wav 14/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/ren.wav 15/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/seng.wav 16/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/shou.wav 17/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/tian.wav 18/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/tuo.wav 19/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/xia.wav 20/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/you.wav 21/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/yun.wav 22/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/zhe.wav 23/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/chuan.wav 24/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/deng.wav 25/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/diu.wav 26/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/fei.wav 27/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/gen.wav 28/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/lun.wav 29/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/mang.wav 30/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/pian.wav 31/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/po.wav 
32/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/si.wav 33/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/sou.wav 34/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/tao.wav 35/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/ti.wav 36/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/wen.wav 37/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/yong.wav 38/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/yu.wav 39/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ao.wav 40/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ban.wav 41/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ce.wav 42/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/chan.wav 43/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/fang.wav 44/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ju.wav 45/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/lun.wav 46/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/mai.wav 47/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/na.wav 48/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/niu.wav 49/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ping.wav 50/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/qiang.wav 51/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ruo.wav 52/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/shuan.wav 53/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/shui.wav 54/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/si.wav 55/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/sou.wav 56/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/su.wav 57/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ti.wav 58/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/xiao.wav 59/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/xue.wav 60/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/zang.wav 61/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/zong.wav 62/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/cang.wav 63/82, 
file=D:\dataSet\mandarinTone\msar-2013/b98505039/cong.wav 64/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/ei.wav 65/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/eng.wav 66/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/gou.wav 67/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/ming.wav 68/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/pan.wav 69/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/rui.wav 70/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/se.wav 71/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/shan.wav 72/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/xiang.wav 73/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/zhao.wav 74/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/zuo.wav 75/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/cai.wav 76/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/chun.wav 77/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/die.wav 78/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/eng.wav 79/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/fo.wav 80/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/hen.wav 81/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/kou.wav 82/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/lao.wav Saving auSet.mat after pitch tracking...
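The tutorial does not describe the algorithm inside pitchTrack. To show what a single pitch estimate involves, here is a sketch of one common approach: picking the autocorrelation peak of a frame. The toolbox may work quite differently. The 60 to 500 Hz search range and the semitone conversion (MIDI convention, 69 = 440 Hz) are assumptions. The frame is a synthetic 220 Hz tone with one harmonic.

```matlab
% Autocorrelation-based pitch estimate for one frame (NOT the toolbox's pitchTrack).
fs = 16000;
f0 = 220;                                  % true pitch of the synthetic frame (Hz)
n = (0:639)';                              % 40 ms frame
frame = sin(2*pi*f0*n/fs) + 0.5*sin(2*pi*2*f0*n/fs);
frame = frame - mean(frame);
minF0 = 60; maxF0 = 500;                   % assumed pitch search range (Hz)
lagMin = floor(fs/maxF0);
lagMax = ceil(fs/minF0);
acf = zeros(lagMax, 1);
for lag = 1:lagMax
    acf(lag) = sum(frame(1:end-lag) .* frame(1+lag:end));   % unnormalized ACF
end
[~, idx] = max(acf(lagMin:lagMax));        % strongest periodicity within the range
bestLag = idx + lagMin - 1;
pitchHz = fs/bestLag;
pitchSemitone = 69 + 12*log2(pitchHz/440); % semitone scale (assumed convention)
fprintf('Estimated pitch: %.1f Hz (%.2f semitones)\n', pitchHz, pitchSemitone);
```

Running such an estimate frame by frame over a segment yields a pitch curve. The steps that follow turn each curve into a feature vector.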

After pitch tracking, we need to extract features. This is accomplished in the following 3 steps:

- Interpolate the original pitch to have a fixed length of 100.

- Subtract the mean of the interpolated pitch, such that its average value is 0.

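- Fit a 3rd-order polynomial to the normalized pitch curve, and use the 4 returned coefficients as the features for tone recognition. (In the code below, the fit is done with Chebyshev polynomials via polyFitChebyshev on the mean-subtracted pitch, and the 100-point interpolated curve is kept for plotting only.)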

fprintf('Feature extraction...\n');
fprintf('Order for polynomial fitting=%d\n', trOpt.feaOpt.polyOrder);
for i=1:length(auSet)
    fprintf('%d/%d, file=%s\n', i, length(auSet), auSet(i).path);
    for j=1:length(auSet(i).segment)
        pitchObj=auSet(i).segment(j).pitchObj;
        pitchNorm=pitchObj.pitch-mean(pitchObj.pitch);
        x=linspace(-1, 1, length(pitchNorm));
    %   coef=polyfit(x, pitchNorm, trOpt.feaOpt.polyOrder);	% Common polynomial fitting
        coef=polyFitChebyshev(pitchNorm, trOpt.feaOpt.polyOrder);	% Chebyshev polynomial fitting. Why pitchNorm does not give better performance?
        auSet(i).segment(j).coef=coef(:);
        temp=interp1(x, pitchNorm, linspace(-1,1));	% For plotting only
        auSet(i).segment(j).pitchNorm=temp(:);	% For plotting only
    end
end

Feature extraction... Order for polynomial fitting=3 1/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/bu.wav 2/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/duan.wav 3/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/dui.wav 4/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/gao.wav 5/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/han.wav 6/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/hua.wav 7/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/ji.wav 8/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/kun.wav 9/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/le.wav 10/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/lei.wav 11/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/nang.wav 12/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/nong.wav 13/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/pin.wav 14/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/ren.wav 15/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/seng.wav 16/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/shou.wav 17/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/tian.wav 18/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/tuo.wav 19/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/xia.wav 20/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/you.wav 21/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/yun.wav 22/82, file=D:\dataSet\mandarinTone\msar-2013/b00202071/zhe.wav 23/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/chuan.wav 24/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/deng.wav 25/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/diu.wav 26/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/fei.wav 27/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/gen.wav 28/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/lun.wav 29/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/mang.wav 30/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/pian.wav 31/82, 
file=D:\dataSet\mandarinTone\msar-2013/b00901078/po.wav 32/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/si.wav 33/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/sou.wav 34/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/tao.wav 35/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/ti.wav 36/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/wen.wav 37/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/yong.wav 38/82, file=D:\dataSet\mandarinTone\msar-2013/b00901078/yu.wav 39/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ao.wav 40/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ban.wav 41/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ce.wav 42/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/chan.wav 43/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/fang.wav 44/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ju.wav 45/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/lun.wav 46/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/mai.wav 47/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/na.wav 48/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/niu.wav 49/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ping.wav 50/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/qiang.wav 51/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ruo.wav 52/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/shuan.wav 53/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/shui.wav 54/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/si.wav 55/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/sou.wav 56/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/su.wav 57/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/ti.wav 58/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/xiao.wav 59/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/xue.wav 60/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/zang.wav 61/82, file=D:\dataSet\mandarinTone\msar-2013/b97902077/zong.wav 62/82, 
file=D:\dataSet\mandarinTone\msar-2013/b98505039/cang.wav 63/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/cong.wav 64/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/ei.wav 65/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/eng.wav 66/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/gou.wav 67/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/ming.wav 68/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/pan.wav 69/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/rui.wav 70/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/se.wav 71/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/shan.wav 72/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/xiang.wav 73/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/zhao.wav 74/82, file=D:\dataSet\mandarinTone\msar-2013/b98505039/zuo.wav 75/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/cai.wav 76/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/chun.wav 77/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/die.wav 78/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/eng.wav 79/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/fo.wav 80/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/hen.wav 81/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/kou.wav 82/82, file=D:\dataSet\mandarinTone\msar-2013/b98901024/lao.wav
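The following sketch uses only built-in functions to walk through the 3 feature-extraction steps on a synthetic rising pitch curve; it does not use polyFitChebyshev. The power-basis fit uses polyfit. The Chebyshev alternative is a plain least-squares fit on the basis T0 to T3, which is an assumption about what a Chebyshev fit computes, not the toolbox code. Both fits span the same cubic polynomials, so they give the same fitted curve and differ only in their coefficients.

```matlab
% 3-step feature extraction on a hypothetical pitch curve (in semitones).
pitch = linspace(55, 62, 37)' + 0.3*sin(linspace(0, pi, 37))';
x  = linspace(-1, 1, length(pitch))';
xi = linspace(-1, 1, 100)';
pitch100  = interp1(x, pitch, xi);         % step 1: resample to a fixed length of 100
pitchNorm = pitch100 - mean(pitch100);     % step 2: remove the mean (pitch level)
coef = polyfit(xi, pitchNorm, 3);          % step 3: 4 power-basis coefficients
% Alternative: least-squares fit on the Chebyshev basis T0..T3
T = [ones(size(xi)), xi, 2*xi.^2-1, 4*xi.^3-3*xi];
chebCoef = T \ pitchNorm;                  % 4 Chebyshev coefficients
disp(coef); disp(chebCoef');
```

Removing the mean makes the features describe the pitch contour rather than the speaker's pitch level. Because of this, speakers with different voice ranges produce comparable coefficients.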

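Once we have the features for all recordings, we can create the dataset for further exploration. The 4 segments of each recording are assumed to appear in tone order (tone 1 to tone 4), so every 4th segment starting from segment i is labeled as tone i: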

segment=[auSet.segment];
ds.input=[];
ds.output=[];
for i=1:4
    toneData(i).segment=segment(i:4:end);
end
for i=1:4
    ds.input=[ds.input, [toneData(i).segment.coef]];
    ds.output=[ds.output, i*ones(1, length(toneData(i).segment))];
end
ds.outputName={'tone1', 'tone2', 'tone3', 'tone4'};
inputNum=size(ds.input, 1);
for i=1:inputNum
    ds.inputName{i}=sprintf('c%d', i-1);	% c0, c1, c2, etc
end
fprintf('Saving ds.mat...\n');
save ds ds

Saving ds.mat...
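The stride-4 indexing `segment(i:4:end)` only labels segments correctly if the segments are stored recording by recording, in tone order. This small sketch uses hypothetical feature columns to check that assumption. It gathers every 4th column starting at column i, just as the dataset-building loop does, and confirms that the resulting labels match the true tones.

```matlab
% Hypothetical layout: 3 recordings x 4 tones, segments stored in recording order.
recNum = 3;
segTone = repmat(1:4, 1, recNum);            % true tone of each stored segment
feat = reshape(1:4*numel(segTone), 4, []);   % hypothetical 4-D feature, one column per segment
dsInput = []; dsOutput = []; order = [];
for i = 1:4
    idx = i:4:numel(segTone);                % i-th segment of every recording
    dsInput  = [dsInput, feat(:, idx)];
    dsOutput = [dsOutput, i*ones(1, numel(idx))];
    order    = [order, idx];
end
disp(dsOutput);
```

If a recording had kept a wrong number of segments, this alignment would break. This is one reason the recordings without exactly 4 segments were removed earlier.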

Dataset visualization

Once all the necessary information is stored in "ds", we can invoke many functions in the Machine Learning Toolbox for data visualization and classification.

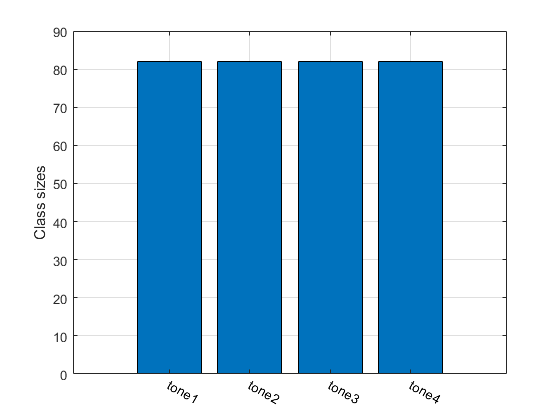

For instance, we can display the size of each class:

figure; [classSize, classLabel]=dsClassSize(ds, 1);

4 features
328 instances
4 classes

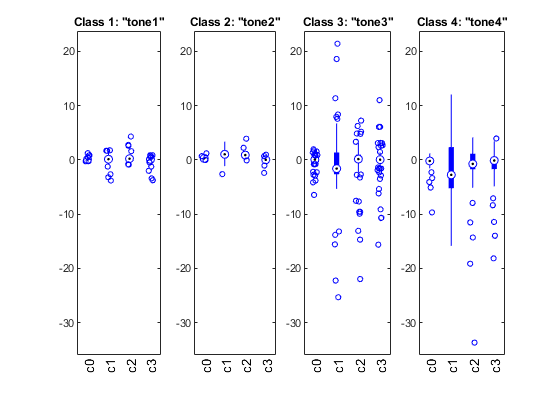

We can plot the distribution of each feature within each class:

figure; dsBoxPlot(ds);

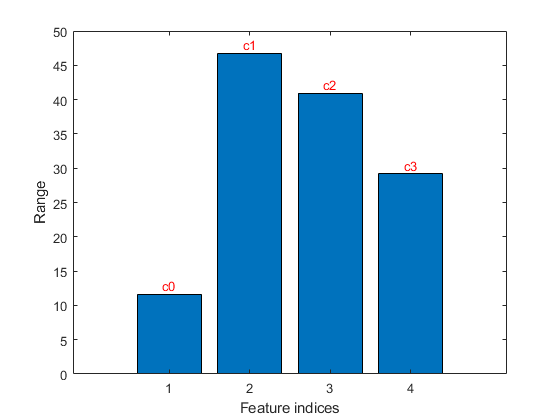

The box plots indicate that the ranges of the features vary a lot. To verify this, we can plot the range of each feature in the dataset:

figure; dsRangePlot(ds);

Large differences in feature ranges can cause problems in distance-based classification, because the features with the largest ranges dominate the distance. To avoid this, we can normalize the features:

ds2=ds; ds2.input=inputNormalize(ds2.input);

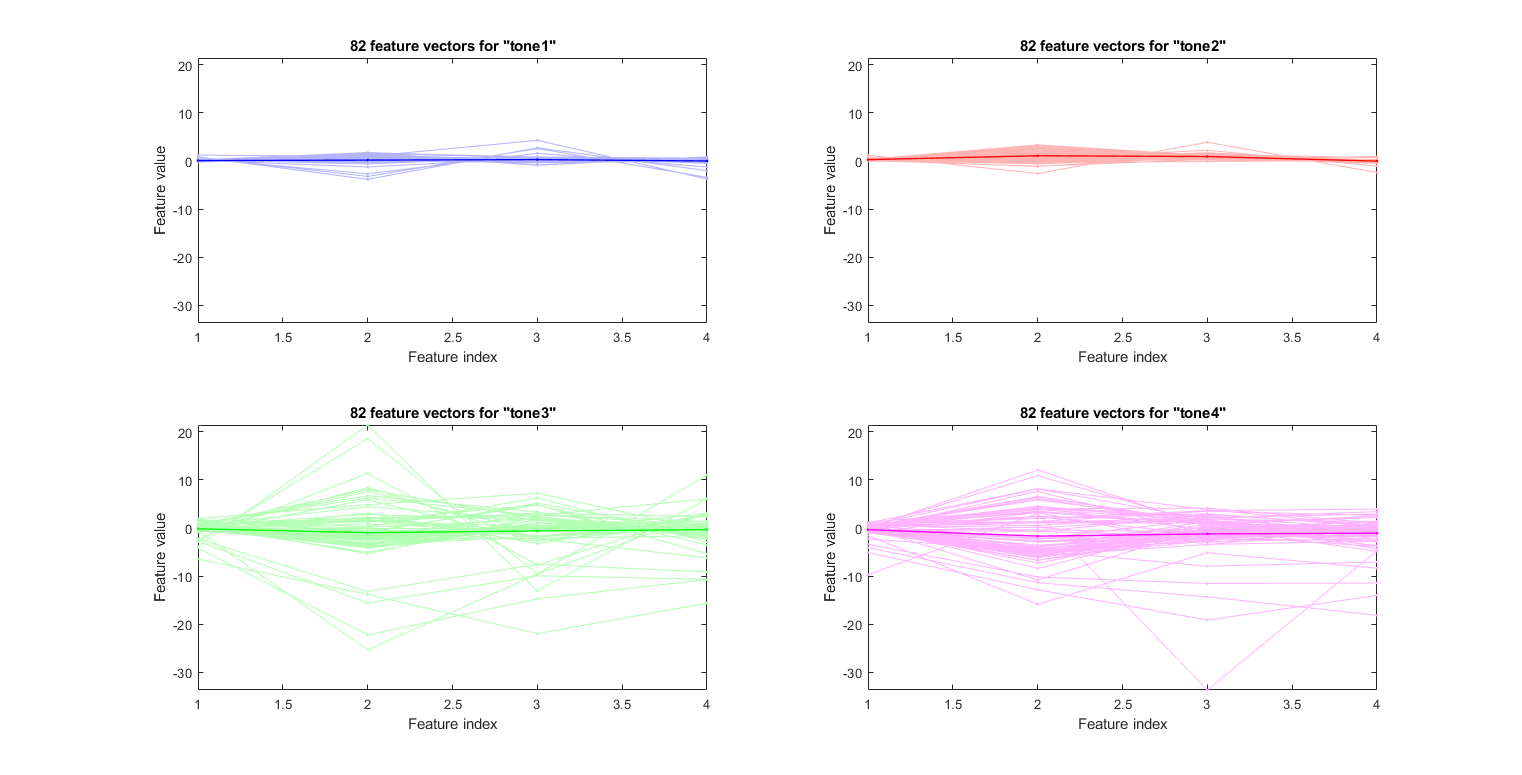
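The tutorial does not state which normalization inputNormalize applies. A common choice is z-score normalization: shift each feature (row) to zero mean and scale it to unit standard deviation. The sketch below assumes that scheme and does not reproduce the toolbox code. bsxfun is used so the code also runs on releases without implicit expansion.

```matlab
% z-score normalization of a features-by-instances matrix (assumed scheme).
X = [1 2 3 4; 100 200 300 400];        % 2 features x 4 instances with very different ranges
mu = mean(X, 2);                       % per-feature mean
sigma = std(X, 0, 2);                  % per-feature standard deviation
Xn = bsxfun(@rdivide, bsxfun(@minus, X, mu), sigma);
disp(Xn);
```

After normalization, both rows become identical, so each feature contributes equally to a Euclidean distance. In practice, mu and sigma computed on training data should also be applied to any test data.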

We can plot the feature vectors within each class:

figure; dsFeaVecPlot(ds); figEnlarge;

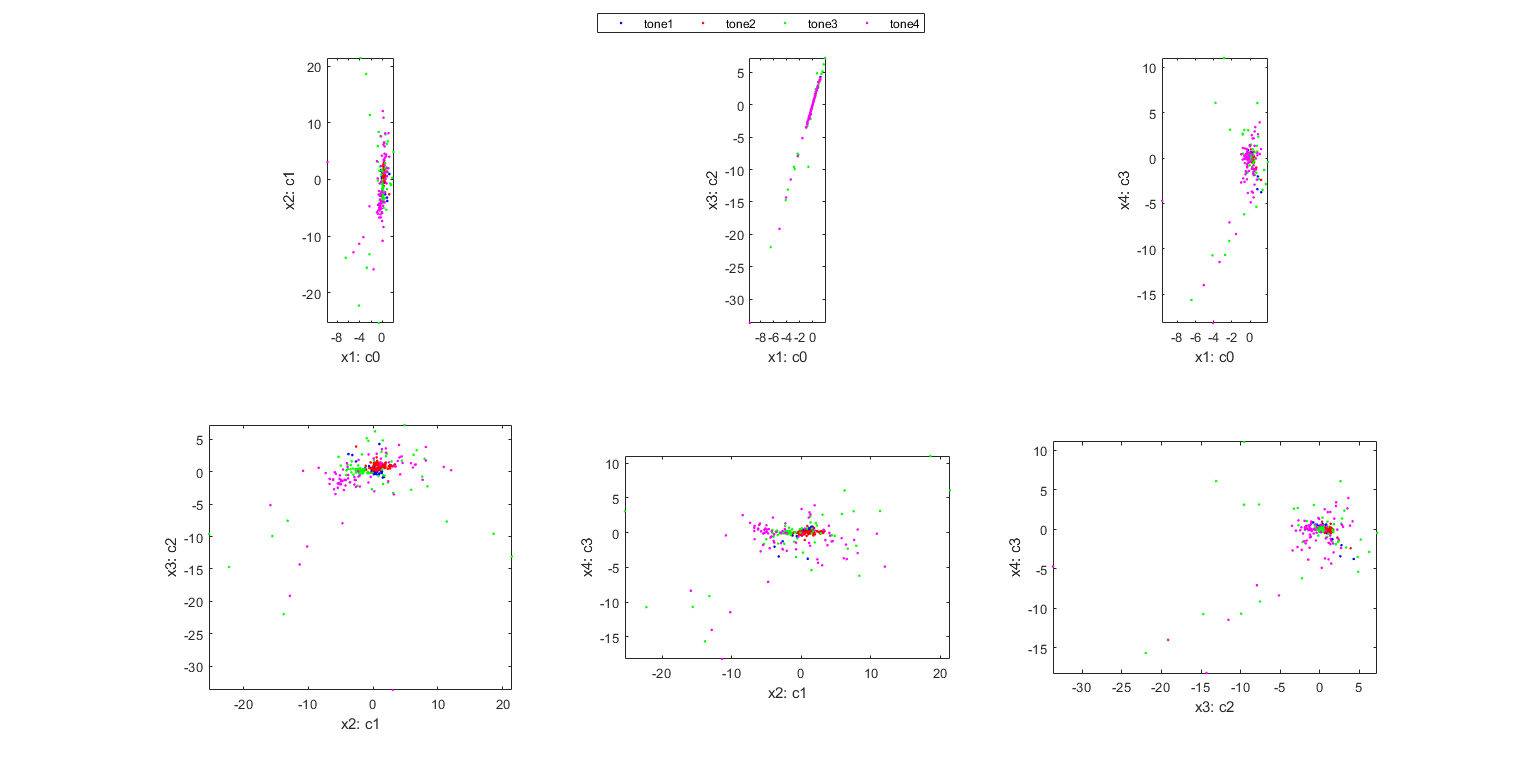

We can draw scatter plots for every pair of features:

figure; dsProjPlot2(ds); figEnlarge;

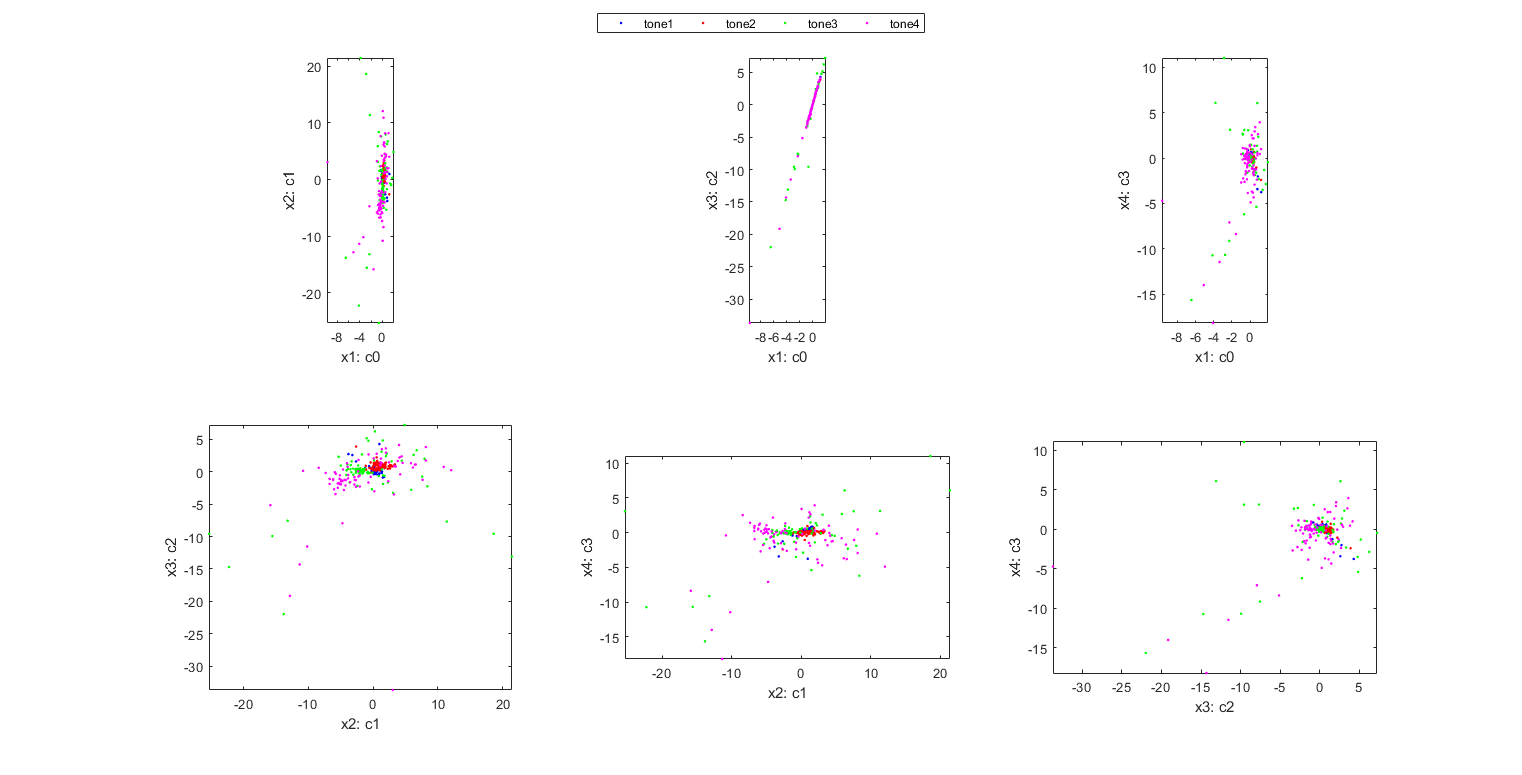

The plots above are hard to read because the features differ greatly in range. We can try the same plots with normalized inputs:

figure; dsProjPlot2(ds2); figEnlarge;

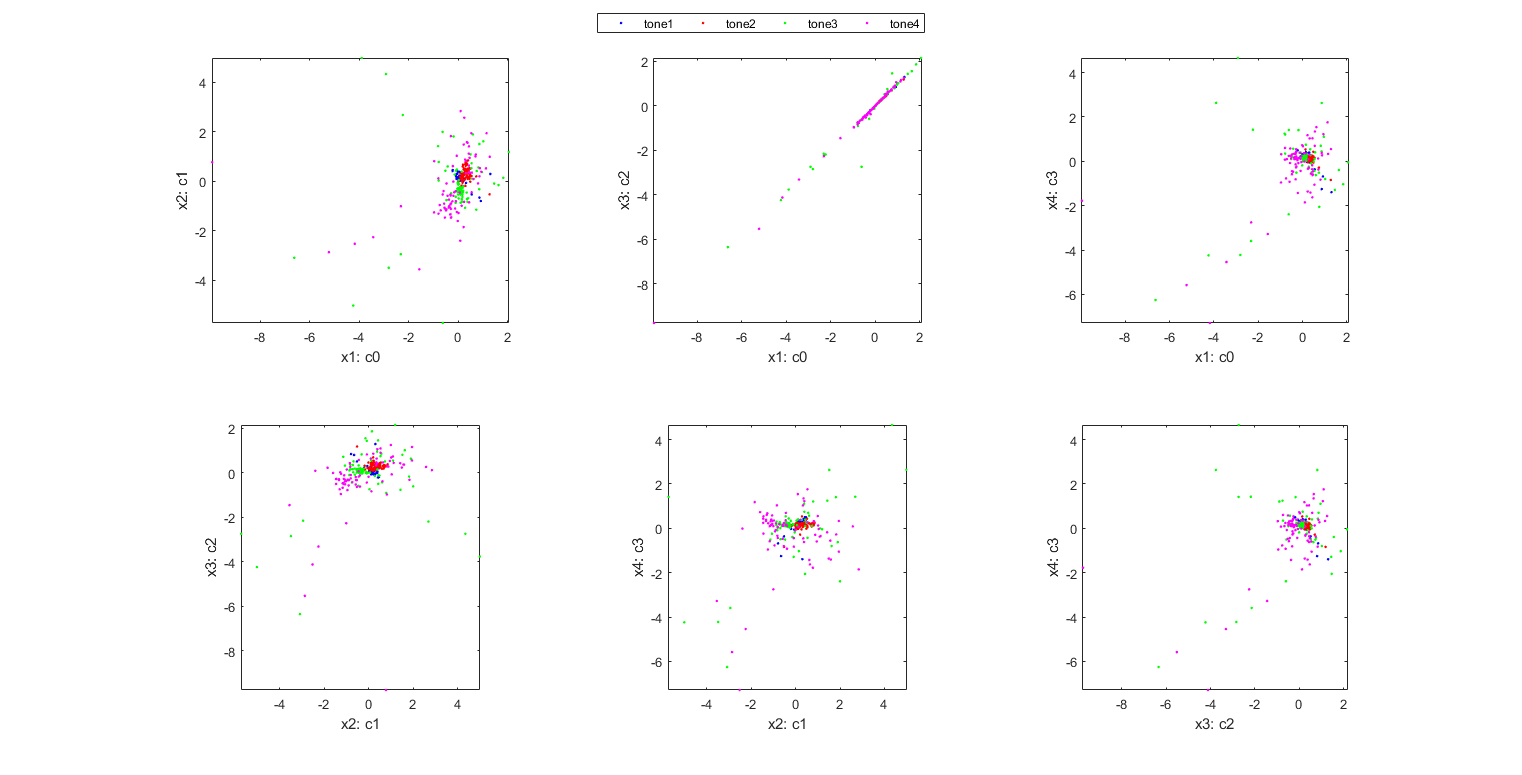

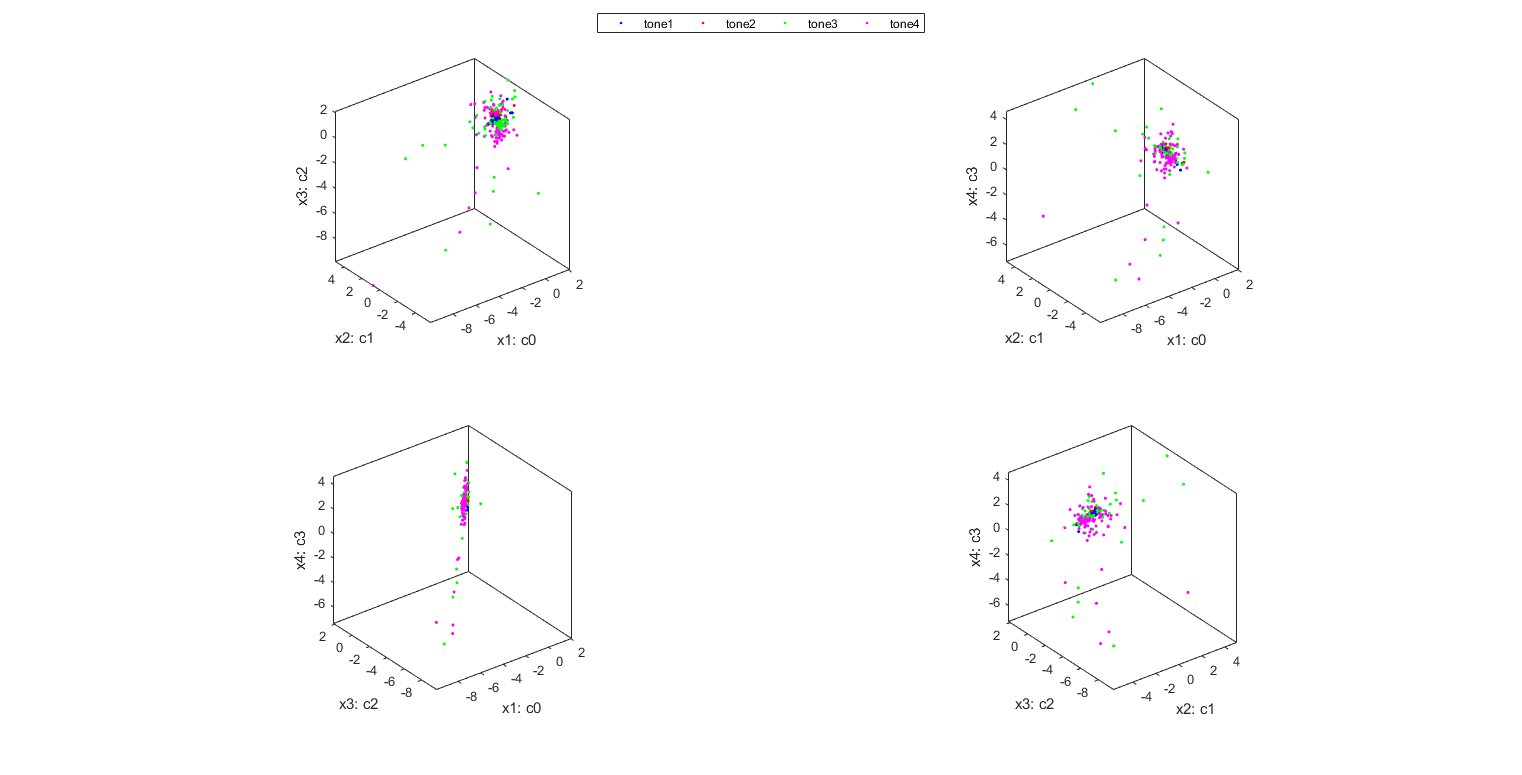

We can also draw scatter plots in 3D space:

figure; dsProjPlot3(ds2); figEnlarge;

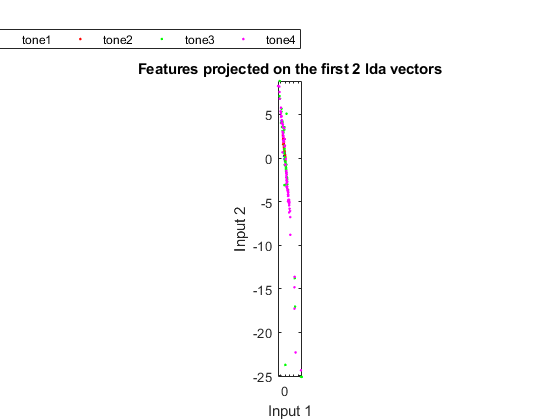

In order to visualize the distribution of the dataset, we can project the original dataset into 2-D space. This can be achieved by LDA (linear discriminant analysis):

ds2d=lda(ds);
ds2d.input=ds2d.input(1:2, :);
figure; dsScatterPlot(ds2d);
xlabel('Input 1'); ylabel('Input 2');
title('Features projected on the first 2 lda vectors');
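This sketch outlines the computation behind Fisher's linear discriminant analysis, independently of the toolbox's lda function. It builds the within-class scatter Sw and the between-class scatter Sb, then takes the leading eigenvectors of Sw\Sb as projection directions. The data are hypothetical: 3 classes of 5 points in 3-D. With C classes, Sb has rank at most C-1, so only 2 directions carry discriminant information here.

```matlab
% Fisher LDA sketch on hypothetical 3-class, 3-D data.
base = 0.3*[0 0 0; 1 0 0; 0 1 0; 0 0 1; 1 1 1]';   % 3x5 within-class offsets
centers = [0 0 0; 3 0 1; 0 3 -1]';                  % class centers (columns)
X = []; y = [];
for c = 1:3
    X = [X, bsxfun(@plus, base, centers(:, c))];
    y = [y, c*ones(1, 5)];
end
mu = mean(X, 2);
Sw = zeros(3); Sb = zeros(3);
for c = 1:3
    Xc = X(:, y == c);
    muC = mean(Xc, 2);
    D = bsxfun(@minus, Xc, muC);
    Sw = Sw + D*D';                                   % within-class scatter
    Sb = Sb + size(Xc, 2)*(muC - mu)*(muC - mu)';     % between-class scatter
end
[V, L] = eig(Sw \ Sb);
[ev, order] = sort(real(diag(L)), 'descend');
W = real(V(:, order(1:2)));       % top-2 discriminant directions
X2 = W' * X;                      % 2 x N projected data
disp(ev');
```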

Classification

We can start with the most straightforward classifier, KNNC (k-nearest-neighbor classifier), and estimate its recognition rate by leave-one-out (LOO) cross validation:

rr=knncLoo(ds);

fprintf('rr=%g%% for ds\n', rr*100);

rr=71.6463% for ds
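Leave-one-out evaluation of a nearest-neighbor classifier can be sketched in a few lines. Each instance is classified by its nearest neighbor among all the other instances, and the recognition rate is the fraction classified correctly. This is a 1-NN sketch on hypothetical data. The value of k and the distance measure used by knncLoo are not stated in the tutorial, so Euclidean distance with k = 1 is an assumption.

```matlab
% Leave-one-out 1-NN recognition rate (hypothetical 2-class data).
X = [0 0.1 0.2 1 1.1 1.2; 0 0.1 0 1 1 1.1];   % 2 features x 6 instances
y = [1 1 1 2 2 2];
N = size(X, 2);
computed = zeros(1, N);
for n = 1:N
    d = sum(bsxfun(@minus, X, X(:, n)).^2, 1);   % squared Euclidean distances
    d(n) = inf;                                  % leave the test instance out
    [~, nearest] = min(d);
    computed(n) = y(nearest);
end
rr = mean(computed == y);
fprintf('rr=%g%%\n', rr*100);
```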

With normalized inputs, KNNC usually achieves better accuracy, although in this case the recognition rate stays the same:

[rr, computed]=knncLoo(ds2);

fprintf('rr=%g%% for ds2 of normalized inputs\n', rr*100);

rr=71.6463% for ds2 of normalized inputs

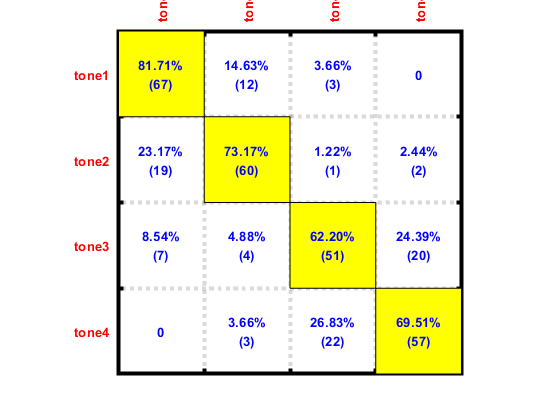

We can plot the confusion matrix:

confMat=confMatGet(ds2.output, computed); opt=confMatPlot('defaultOpt'); opt.className=ds.outputName; opt.mode='both'; figure; confMatPlot(confMat, opt);

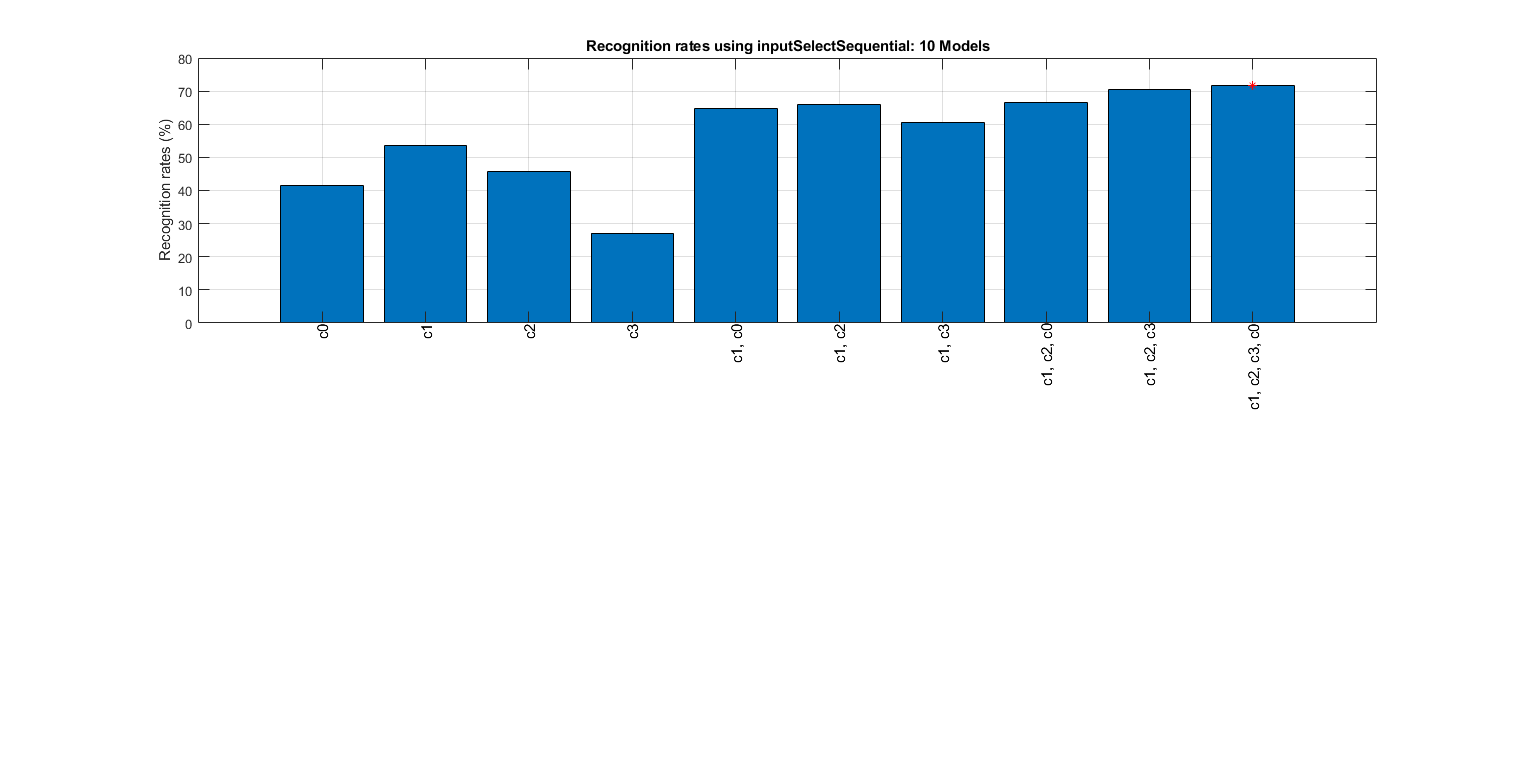
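A confusion matrix counts how often each desired class is classified as each computed class. The sketch below builds one with accumarray from hypothetical labels. It assumes rows are desired classes and columns are computed classes; the row and column convention of confMatGet may differ. The overall recognition rate is the trace divided by the total count, and dividing each row by its sum gives per-class percentages.

```matlab
% Confusion matrix from desired and computed labels (hypothetical data).
desired  = [1 1 1 2 2 3 3 3 4 4];
computed = [1 2 1 2 2 3 4 3 4 1];
classNum = 4;
confMat = accumarray([desired(:), computed(:)], 1, [classNum classNum]);
rr = trace(confMat)/sum(confMat(:));                          % overall recognition rate
rowPct = bsxfun(@rdivide, confMat, sum(confMat, 2)) * 100;    % per-class percentages
disp(confMat); fprintf('rr=%g%%\n', rr*100);
```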

We can perform sequential forward input selection to find the best subset of features:

myTic=tic;

figure; bestInputIndex=inputSelectSequential(ds2); figEnlarge;

fprintf('time=%g sec\n', toc(myTic));

Construct 10 "knnc" models, each with up to 4 inputs selected from all 4 inputs...

Selecting input 1:

Model 1/10: selected={c0} => Recog. rate = 41.5%

Model 2/10: selected={c1} => Recog. rate = 53.7%

Model 3/10: selected={c2} => Recog. rate = 45.7%

Model 4/10: selected={c3} => Recog. rate = 27.1%

Currently selected inputs: c1 => Recog. rate = 53.7%

Selecting input 2:

Model 5/10: selected={c1, c0} => Recog. rate = 64.6%

Model 6/10: selected={c1, c2} => Recog. rate = 65.9%

Model 7/10: selected={c1, c3} => Recog. rate = 60.7%

Currently selected inputs: c1, c2 => Recog. rate = 65.9%

Selecting input 3:

Model 8/10: selected={c1, c2, c0} => Recog. rate = 66.5%

Model 9/10: selected={c1, c2, c3} => Recog. rate = 70.4%

Currently selected inputs: c1, c2, c3 => Recog. rate = 70.4%

Selecting input 4:

Model 10/10: selected={c1, c2, c3, c0} => Recog. rate = 71.6%

Currently selected inputs: c1, c2, c3, c0 => Recog. rate = 71.6%

Overall maximal recognition rate = 71.6%.

Selected 4 inputs (out of 4): c1, c2, c3, c0

time=0.132089 sec

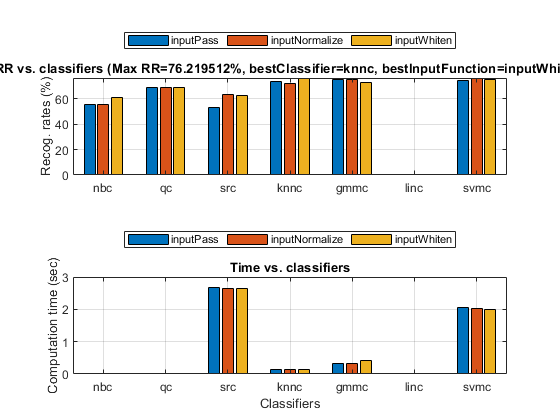
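The log above follows a sequential forward selection pattern: at each step, add the one remaining input that gives the highest recognition rate, then keep the subset with the best rate overall. The sketch below reproduces that pattern using leave-one-out 1-NN as the evaluator. The data are hypothetical; feature 2 separates the classes and features 1 and 3 are noise. The evaluator is an assumption, not necessarily what inputSelectSequential uses internally.

```matlab
% Sequential forward selection with leave-one-out 1-NN (hypothetical data).
X = [0.5 0.1 0.9 0.3 0.7 0.2;     % feature 1 (noise)
     0   0.1 0.2 1   1.1 1.2;     % feature 2 (informative)
     0.4 0.8 0.1 0.6 0.3 0.9];    % feature 3 (noise)
y = [1 1 1 2 2 2];
[featNum, N] = size(X);
selected = []; remaining = 1:featNum;
history = zeros(1, featNum);      % best rate after adding each input
while ~isempty(remaining)
    rrCand = zeros(1, numel(remaining));
    for k = 1:numel(remaining)
        Xs = X([selected, remaining(k)], :);
        computed = zeros(1, N);
        for n = 1:N
            d = sum(bsxfun(@minus, Xs, Xs(:, n)).^2, 1);
            d(n) = inf;                          % leave one out
            [~, nearest] = min(d);
            computed(n) = y(nearest);
        end
        rrCand(k) = mean(computed == y);
    end
    [bestRr, bestK] = max(rrCand);
    selected = [selected, remaining(bestK)];
    remaining(bestK) = [];
    history(numel(selected)) = bestRr;
    fprintf('Selected [%s] => rr = %.1f%%\n', num2str(selected), 100*bestRr);
end
[overallBest, bestCount] = max(history);   % first (smallest) subset reaching the max
bestInputIndex = selected(1:bestCount);
```

This greedy search needs about n^2/2 evaluations for n inputs, instead of the 2^n evaluations an exhaustive search would require. That matches the 10 models (4+3+2+1) reported above for 4 inputs.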

We can even perform an exhaustive search over classifiers and input normalization schemes, evaluating each combination by 10-fold cross validation:

opt=perfCv4classifier('defaultOpt');
opt.foldNum=10;
figure; tic; [perfData, bestId]=perfCv4classifier(ds, opt, 1); toc
structDispInHtml(perfData, 'Performance of various classifiers via cross validation');

Elapsed time is 15.762848 seconds.

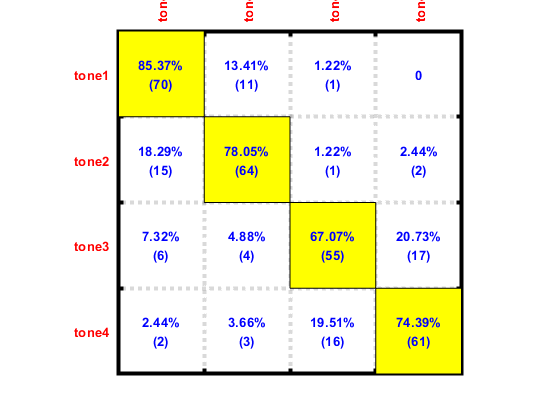
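For readers unfamiliar with k-fold cross validation, this sketch shows how it works. Each instance is assigned to one of foldNum folds. Each fold in turn serves as the test set while the remaining folds form the training set, and every instance is therefore tested exactly once. Fold assignment here is round-robin for reproducibility; in practice the instances are usually shuffled first, for example with randperm. The 1-NN classifier and the data are hypothetical stand-ins for the classifiers compared by perfCv4classifier.

```matlab
% 10-fold cross validation of a 1-NN classifier (hypothetical 2-class data).
X = [0.1*(0:9), 2 + 0.1*(0:9); zeros(1, 10), ones(1, 10)];   % 2 features x 20 instances
y = [ones(1, 10), 2*ones(1, 10)];
N = numel(y); foldNum = 10;
foldId = mod(0:N-1, foldNum) + 1;      % round-robin fold assignment
computed = zeros(1, N);
for f = 1:foldNum
    testIdx  = find(foldId == f);
    trainIdx = find(foldId ~= f);
    for n = testIdx
        d = sum(bsxfun(@minus, X(:, trainIdx), X(:, n)).^2, 1);
        [~, nearest] = min(d);
        computed(n) = y(trainIdx(nearest));
    end
end
rr = mean(computed == y);
fprintf('10-fold CV rr=%g%%\n', rr*100);
```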

We can then display the confusion matrix of the best classifier:

computedClass=perfData(bestId).bestComputedClass;

confMat=confMatGet(ds.output, computedClass);

opt=confMatPlot('defaultOpt');

opt.className=ds.outputName;

figure; confMatPlot(confMat, opt);

Error analysis

We can assign each classification result back to its segment and record whether the classification is correct:

k=1;
for i=1:4
    for j=1:length(toneData(i).segment)
        toneData(i).segment(j).predicted=computedClass(k);
        toneData(i).segment(j).correct=isequal(i, computedClass(k));
        k=k+1;
    end
end

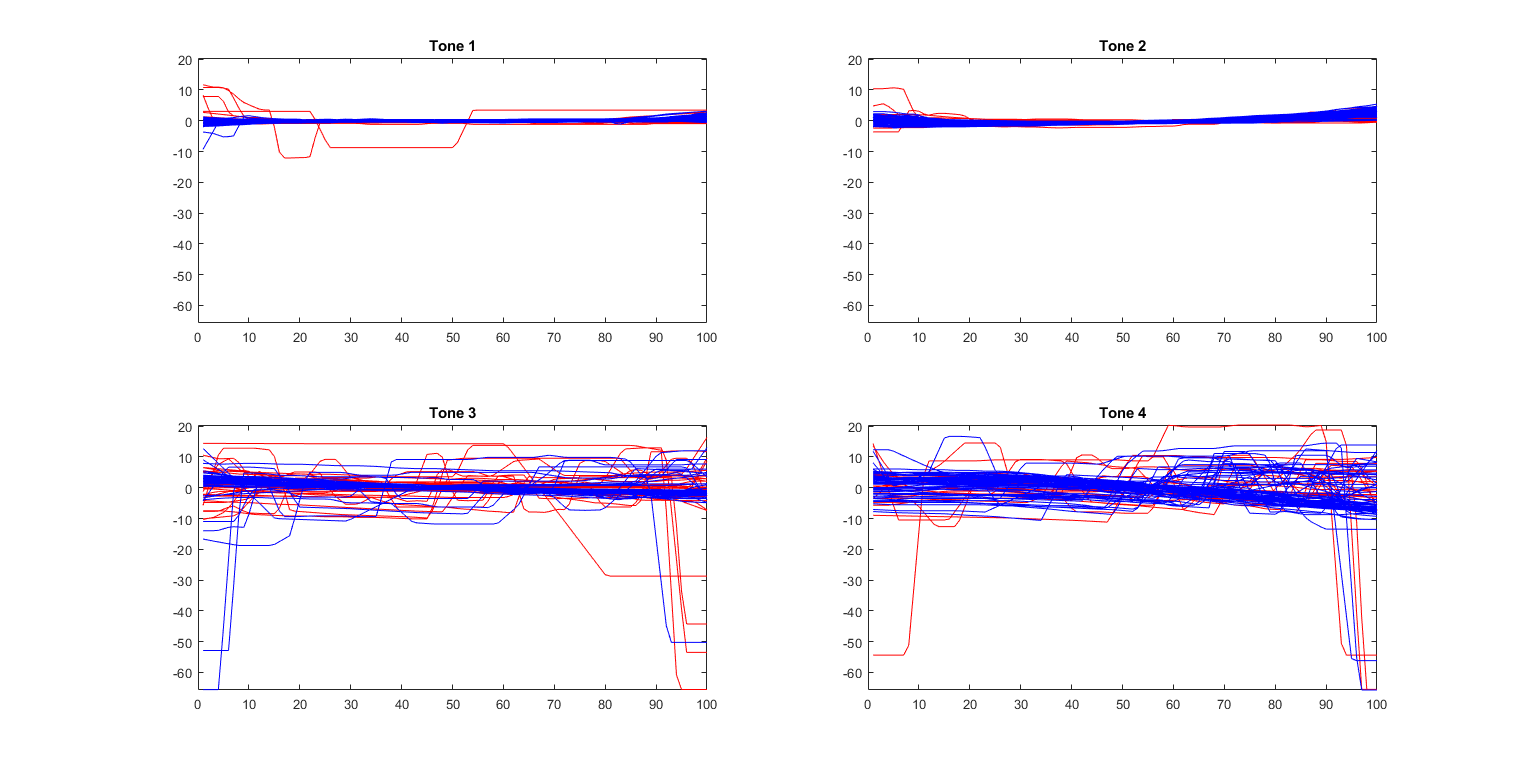

First of all, we can plot the normalized pitch curves for each tone:

figure;
for i=1:4
    subplot(2,2,i);
    index0=[toneData(i).segment.correct]==0;
    index1=[toneData(i).segment.correct]==1;
    pitchMat=[toneData(i).segment.pitchNorm];
    pitchLen=size(pitchMat, 1);
    plot((1:pitchLen)', pitchMat(:,index0), 'r', (1:pitchLen)', pitchMat(:, index1), 'b');
    title(sprintf('Tone %d', i));
end
axisLimitSame; figEnlarge

In the above plots of normalized pitch curves, red and blue indicate misclassified and correctly classified cases, respectively. Some of the misclassified pitch curves are not smooth. Therefore, if we can derive smoother pitch curves, the overall accuracy of tone recognition may improve.
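One simple way to smooth a pitch curve is a running median, which removes isolated jumps (such as pitch-doubling or pitch-halving errors) while preserving the overall contour. This is offered as one possible technique, not the author's method; the commented-out pitchTrackForcedSmooth in the pitch-tracking code is not shown. The window length of 3 and the pitch values are hypothetical. The median is computed manually so that no extra toolbox is needed.

```matlab
% Running-median smoothing of a hypothetical pitch curve (semitones).
pitch = [60 60.2 60.1 72 60.3 60.4 48 60.5 60.6 60.7]';  % two isolated jumps
w = 3; half = (w-1)/2;                 % assumed window length
smoothed = pitch;                      % endpoints are left unchanged
for k = 1+half:numel(pitch)-half
    smoothed(k) = median(pitch(k-half:k+half));
end
disp([pitch, smoothed]);
```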

Summary

This is a brief tutorial on tone recognition in Mandarin Chinese, using features derived from pitch (with volume used for endpoint detection). There are several directions for further improvement:

- Explore other features, such as timbre.

- Use the whole dataset for classification, instead of only the first 100 recordings.

Appendix

List of functions and datasets used in this script

Date and time when finishing this script:

fprintf('%s\n', char(datetime));

18-Jan-2020 20:54:28

Overall elapsed time:

toc(scriptStartTime)

Elapsed time is 60.927772 seconds.